Hello! 👋

I am Matteo, a researcher in machine learning.

My research focuses on reinforcement learning applied to multi-agent and multi-robot systems.

I am currently working on training LLM agents for long-horizon tasks using reinforcement learning at Meta.

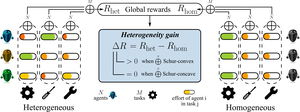

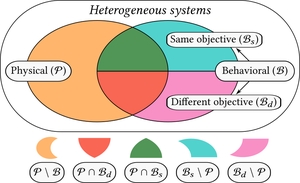

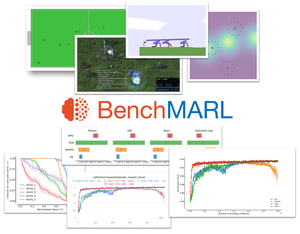

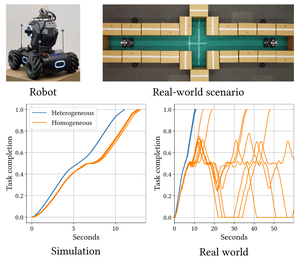

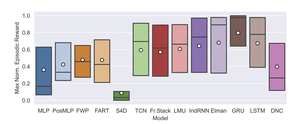

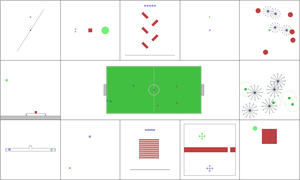

I obtained my PhD from the Prorok Lab at the University of Cambridge, where I studied heterogeneity in multi-agent and multi-robot systems and developed the VMAS simulator. For this research, I employed techniques from the fields of Multi-Agent Reinforcement Learning and Graph Neural Networks. During my PhD, I joined PyTorch at Meta, where I created BenchMARL and helped develop TorchRL.

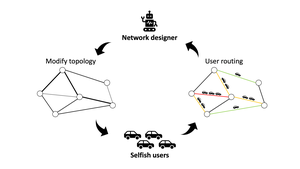

For my master's degree, I investigated the problem of transport network design for multi-agent routing.

See my CV and the (more verbose) academic CV.

- Reinforcement Learning

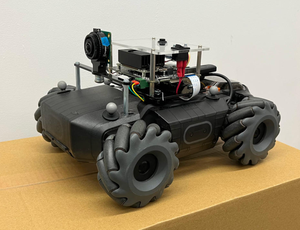

- Multi-Robot Systems

- LLM Agents

- Heterogeneous Multi-Agent Learning and Coordination

- Graph Neural Networks

PhD in Computer Science, 2025

University of Cambridge

MPhil in Advanced Computer Science, 2021

University of Cambridge

BEng in Computer Engineering, 2020

Politecnico di Milano

Featured Publications

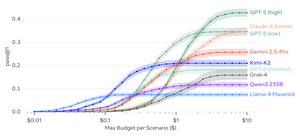

We introduce Meta Agents Research Environments (ARE), a research platform for scalable creation of environments, integration of synthetic or real applications, and execution of agentic orchestrations. We also propose Gaia2, a benchmark built in ARE and designed to measure general agent capabilities. Unlike prior benchmarks, Gaia2 runs asynchronously, surfacing new failure modes that are invisible in static settings.

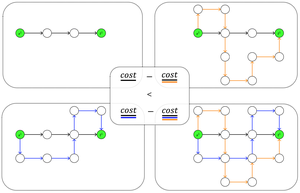

We introduce Diversity Control (DiCo), a method that controls diversity to an exact value of a given metric by representing policies as the sum of a parameter-shared component and dynamically scaled per-agent components. By applying constraints directly to the policy architecture, DiCo leaves the learning objective unchanged, making it applicable to any actor-critic MARL algorithm. We theoretically prove that DiCo achieves the desired diversity, and we provide several experiments, in both cooperative and competitive tasks, that show how DiCo can be employed as a novel paradigm to increase performance and sample efficiency in MARL.
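The "shared component plus scaled per-agent components" idea can be illustrated with a minimal sketch. This is not the DiCo implementation: the linear maps, the function names (`linear`, `diversity`, `constrained_policies`), the diversity metric (mean pairwise Euclidean distance between agent outputs), and the scaling rule (target divided by measured diversity) are all assumptions chosen for illustration. Under these assumptions, the shared term cancels in every pairwise difference, so rescaling the per-agent terms sets the metric to the target value.

```python
import math
import random


def linear(weights, obs):
    """Apply a linear map (given as a list of rows) to one observation vector."""
    return [sum(w * x for w, x in zip(row, obs)) for row in weights]


def diversity(outputs):
    """Hypothetical metric: mean Euclidean distance over all agent pairs,
    averaged over observations. outputs[i][k] is agent i's output for obs k."""
    n = len(outputs)
    pair_means = []
    for i in range(n):
        for j in range(i + 1, n):
            dists = [math.dist(a, b) for a, b in zip(outputs[i], outputs[j])]
            pair_means.append(sum(dists) / len(dists))
    return sum(pair_means) / len(pair_means)


def constrained_policies(obs_batch, shared_w, agent_ws, target):
    """Return per-agent actions: shared(obs) + scale * per_agent_i(obs)."""
    shared = [linear(shared_w, o) for o in obs_batch]
    per_agent = [[linear(w, o) for o in obs_batch] for w in agent_ws]
    # Assumed scaling rule: rescale per-agent outputs so the metric hits the target.
    scale = target / diversity(per_agent)
    return [
        [[s + scale * h for s, h in zip(s_vec, h_vec)]
         for s_vec, h_vec in zip(shared, agent_out)]
        for agent_out in per_agent
    ]


rng = random.Random(0)


def rand_matrix(rows, cols):
    return [[rng.gauss(0, 1) for _ in range(cols)] for _ in range(rows)]


obs_batch = rand_matrix(16, 4)                    # 16 observations of size 4
shared_w = rand_matrix(2, 4)                      # shared map to 2-D actions
agent_ws = [rand_matrix(2, 4) for _ in range(3)]  # one map per agent

actions = constrained_policies(obs_batch, shared_w, agent_ws, target=0.5)
achieved = diversity(actions)
```

In this toy setting, `achieved` matches the target up to floating-point error regardless of the weights, which mirrors the claim that the constraint lives in the architecture rather than in the learning objective.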

Publications

